Introduction

Imagine you could talk to your robot the way you talk to a friend. You could ask it to fetch you a drink, play a game with you, or help you with your homework. Sounds like science fiction, right? Well, not entirely anymore. With PaLM-E, a model from Google, robots can interpret natural language instructions from humans and turn them into steps to carry out. PaLM-E is an embodied multimodal language model that can process different types of sensor data and generate appropriate responses. In this article, we will explore what PaLM-E is, how it works, and what it could do for us in the future.

About PaLM-E

Robots are becoming more and more common in our daily lives. They can vacuum our floors, deliver our packages, and even assist in surgery. But what if we could communicate with them more naturally and intuitively? What if we could just tell them what we want them to do, instead of programming them with complex code?

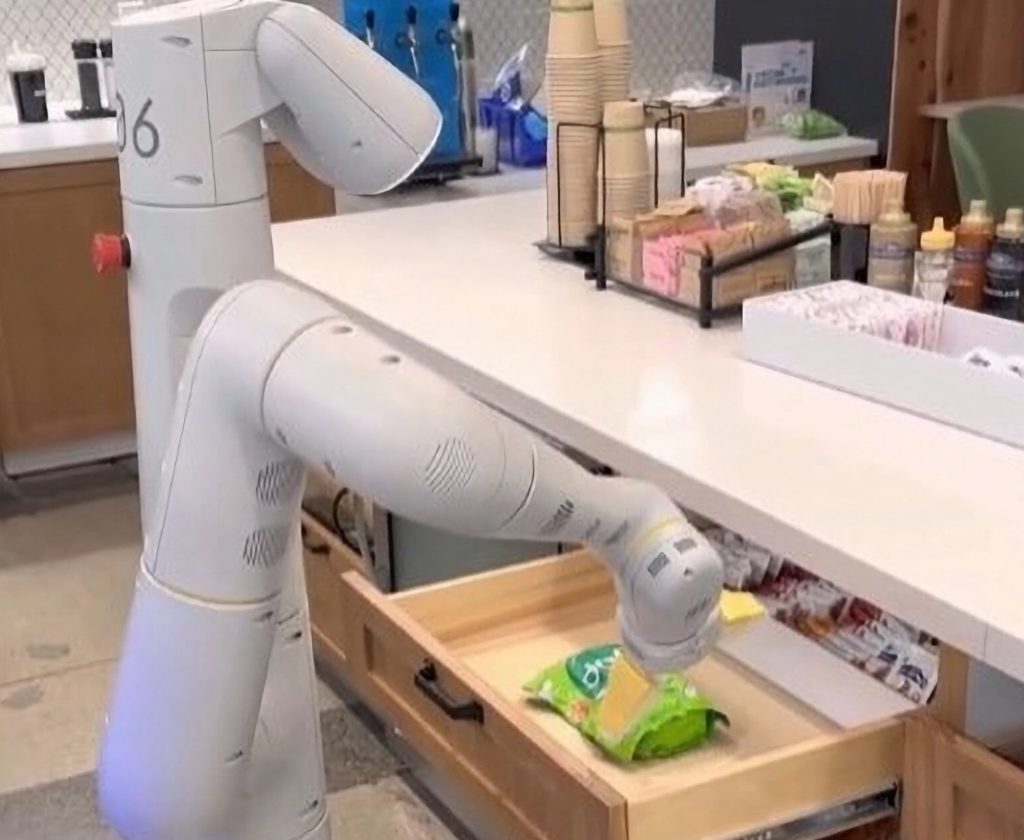

That’s the vision behind PaLM-E, Google’s embodied multimodal language model that can understand natural language instructions and generate plans for robots. PaLM-E can handle different types of sensor data, such as images and robot state, and produce appropriate responses. For example, you could ask PaLM-E to “pick up the red ball” or “go to the kitchen” and it would produce the steps for the robot to carry out.

How does PaLM-E work?

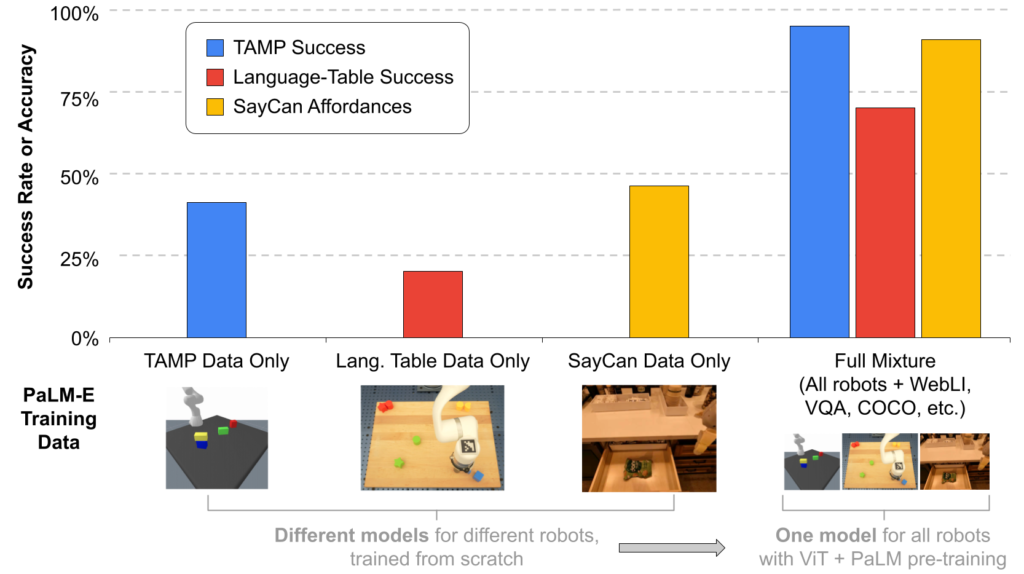

PaLM-E works by injecting observations into a pre-trained language model. It transforms sensor data, such as images, into vectors of the same kind the language model uses to represent words, so that observations and text can sit side by side in one input sequence. The model then generates a textual completion autoregressively, one token at a time, given a prefix or prompt. For example, if you give PaLM-E an image of a room with some objects and a prompt like “pick up”, it would complete the sentence with something like “the blue book on the table”.
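To make the idea of "injecting observations" more concrete, here is a minimal sketch in plain Python. It is not PaLM-E's actual code: the dimensions, the tiny vocabulary, the `<img>` placeholder, the random projection, and the function names are all assumptions made for illustration. It only shows the general shape of the technique, in which an image feature vector is projected to the same width as the word embeddings and placed in the input sequence alongside them.

```python
import random

EMBED_DIM = 4          # hypothetical width of the language model's token embeddings
IMAGE_FEATURE_DIM = 6  # hypothetical width of an image encoder's output

random.seed(0)

# Toy embedding table standing in for the language model's own word embeddings.
VOCAB = ["pick", "up", "the", "blue", "book"]
TOKEN_EMBEDDINGS = {
    word: [random.uniform(-1, 1) for _ in range(EMBED_DIM)] for word in VOCAB
}

# Projection matrix (random here, learned in a real system) that maps
# image features into the token-embedding space.
PROJECTION = [
    [random.uniform(-1, 1) for _ in range(IMAGE_FEATURE_DIM)]
    for _ in range(EMBED_DIM)
]


def project(features):
    """Map one image feature vector to a vector of width EMBED_DIM."""
    return [sum(w * x for w, x in zip(row, features)) for row in PROJECTION]


def build_input_sequence(prompt_tokens, image_features):
    """Replace each "<img>" placeholder with the next projected image vector."""
    remaining = iter(image_features)
    sequence = []
    for token in prompt_tokens:
        if token == "<img>":
            sequence.append(project(next(remaining)))
        else:
            sequence.append(TOKEN_EMBEDDINGS[token])
    return sequence


# One made-up image feature vector, followed by the text prompt "pick up".
image_features = [[0.1 * i for i in range(IMAGE_FEATURE_DIM)]]
sequence = build_input_sequence(["<img>", "pick", "up"], image_features)
print(len(sequence), len(sequence[0]))  # 3 4
```

Every element of the resulting sequence has the same width, so a language model could consume the image vector as if it were just another word.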

PaLM-E is based on PaLM, Google’s existing large language model (LLM), which is similar in kind to the technology behind ChatGPT, OpenAI’s chatbot that can hold natural conversations with humans. PaLM-E extends PaLM to make it embodied and multimodal. This means that it can interact with physical environments and process different kinds of inputs.

What are some applications of PaLM-E?

PaLM-E has many potential applications in domains where natural language communication with robots is desirable. For example, PaLM-E could be used for:

- Education: PaLM-E could help students learn new skills or concepts by providing feedback or guidance in natural language.

- Entertainment: PaLM-E could create immersive and interactive experiences for users by controlling virtual or physical characters through natural language commands.

- Healthcare: PaLM-E could assist patients or caregivers by directing robots to perform tasks such as fetching medication or monitoring vital signs.

- Industry: PaLM-E could improve productivity and efficiency by coordinating multiple robots or machines through natural language commands.

PaLM-E is an exciting innovation that brings us closer to human-like communication with robots. It opens up new possibilities for more natural and intuitive interactions between humans and machines. With PaLM-E, we can talk to our robots like we talk to our friends.

A Game Changer

Embodied AI is a branch of artificial intelligence that aims to create agents that can interact with physical environments and perform tasks that require perception, cognition, and action. Embodied AI has many potential applications in domains such as robotics, gaming, education, healthcare, and entertainment. However, developing embodied AI agents is challenging because they need to process multiple types of sensor data (such as vision, sound, and touch) and communicate with humans using natural language.

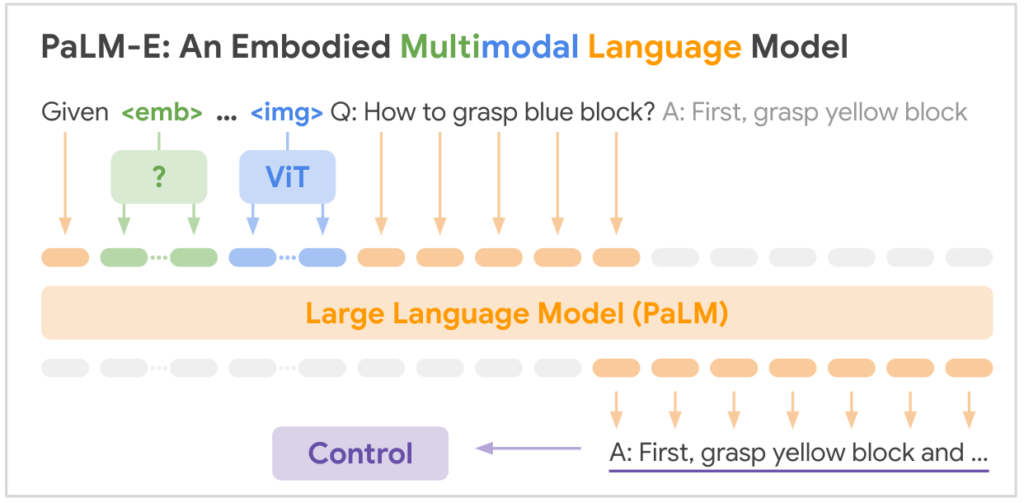

One of the most promising solutions for embodied AI is to use multimodal language models (MLMs) that can integrate different modalities (such as vision and language) and generate natural language commands or responses. MLMs are based on large-scale neural networks that are pre-trained on massive amounts of data from various sources. However, most existing MLMs are limited by their lack of embodiment: they cannot interact with physical environments or control robots.

That’s where PaLM-E comes in. PaLM-E is an embodied multimodal language model developed by Google and the Technical University of Berlin. It extends PaLM, Google’s existing large language model (LLM), so that it can interact with physical environments and process different kinds of inputs.

PaLM-E has several advantages over other MLMs:

- It can handle different types of sensor data, such as images and states. For example, it can take an image of a room with some objects and generate a natural language command for a robot to perform a task in that room.

- It can produce appropriate responses given a prefix or prompt. For example, it can complete a sentence given an image and a partial sentence like “pick up”.

- It can address a variety of embodied reasoning tasks across multiple embodiments. For example, it can control different types of robots or virtual characters using natural language commands.

- It exhibits positive transfer: it benefits from diverse joint training across internet-scale language, vision, and visual-language data. In other words, training on many kinds of tasks together helps its performance on individual tasks compared with training on each one alone, while it can still retain its general language performance.
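The prefix-completion behaviour in the list above can be sketched as a greedy autoregressive loop. This is a toy illustration under loud assumptions: the `NEXT_TOKEN_SCORES` table is made up, the function name `complete` is hypothetical, and a real model would score the next token from the whole multimodal prefix (image included) rather than from the last word alone.

```python
# Hypothetical next-token scores keyed by the previous token. A real model
# would compute these from the full prefix, including the image.
NEXT_TOKEN_SCORES = {
    "pick": {"up": 0.9, "the": 0.1},
    "up": {"the": 0.8, "<end>": 0.2},
    "the": {"blue": 0.6, "book": 0.4},
    "blue": {"book": 0.9, "<end>": 0.1},
    "book": {"<end>": 1.0},
}


def complete(prefix, max_new_tokens=10):
    """Greedily extend a non-empty prefix one token at a time."""
    tokens = list(prefix)
    for _ in range(max_new_tokens):
        # Unknown tokens fall back to ending the sentence.
        scores = NEXT_TOKEN_SCORES.get(tokens[-1], {"<end>": 1.0})
        next_token = max(scores, key=scores.get)  # pick the highest score
        if next_token == "<end>":
            break
        tokens.append(next_token)  # the new token becomes part of the prefix
    return tokens


print(" ".join(complete(["pick", "up"])))  # pick up the blue book
```

Each generated token is appended to the prefix before the next one is chosen, which is what "autoregressive" means here.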

PaLM-E is a game-changer for embodied AI because it enables natural and intuitive communication between humans and machines. It opens up new possibilities for creating more immersive and interactive experiences with embodied agents, and it paves the way for further research on multimodal learning and reasoning for embodied AI.

Thoughts

PaLM-E is more than just a robot brain. It is a step forward in embodied AI that can understand and interact with the world using natural language. PaLM-E is not only a powerful tool for robotics but also a potential companion for humans. With PaLM-E, we could have conversations with our machines and explore new possibilities for learning, entertainment, and creativity. PaLM-E points to the future of embodied AI, and that future is one you can talk to.